Uptime Secrets: 7 Proven Strategies for 99.9% Performance

In today’s digital world, every second counts. When your website or application goes down, so do your revenue, reputation, and user trust. That’s why Uptime isn’t just a metric: it’s a mission. Let’s dive into what Uptime really means and how top companies keep their systems running like clockwork.

What Is Uptime and Why It Matters

Uptime is the measure of how long a system, server, or service remains operational and accessible over a given period. It’s typically expressed as a percentage of that period, with 100% meaning the system was never unavailable. For businesses relying on digital infrastructure, Uptime is directly tied to customer satisfaction, SEO rankings, and revenue generation.

Defining Uptime in Technical Terms

In IT and network operations, Uptime refers to the continuous period during which a machine or system performs its required functions without interruption. This is often contrasted with downtime, which includes any time the system is unavailable due to maintenance, crashes, or outages.

- Uptime is commonly measured using monitoring tools like Pingdom, UptimeRobot, or Datadog.

- It is calculated as: (Total Time - Downtime) / Total Time × 100%.

- For example, 99.9% Uptime allows only about 8.76 hours of downtime per year (0.1% of the 8,760 hours in a 365-day year).
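The formula translates directly into code. This is a minimal illustration rather than a monitoring tool; the function name `uptime_percent` is hypothetical, and both arguments only need to use the same time unit.

```python
def uptime_percent(total_time: float, downtime: float) -> float:
    """(Total Time - Downtime) / Total Time x 100, with both values in the same unit."""
    if total_time <= 0:
        raise ValueError("total_time must be positive")
    if not 0 <= downtime <= total_time:
        raise ValueError("downtime must be between 0 and total_time")
    return (total_time - downtime) / total_time * 100


# A 30-day month is 43,200 minutes; 45 minutes of downtime in it:
print(f"{uptime_percent(43_200, 45):.3f}%")    # 99.896%
# A 365-day year is 8,760 hours; 8.76 hours of downtime in it:
print(f"{uptime_percent(8_760, 8.76):.3f}%")   # 99.900%
```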

The Business Impact of High Uptime

High Uptime isn’t just a technical bragging right—it has real financial and reputational consequences. A study by Gartner estimates that the average cost of IT downtime is $5,600 per minute, which can quickly escalate for large enterprises.

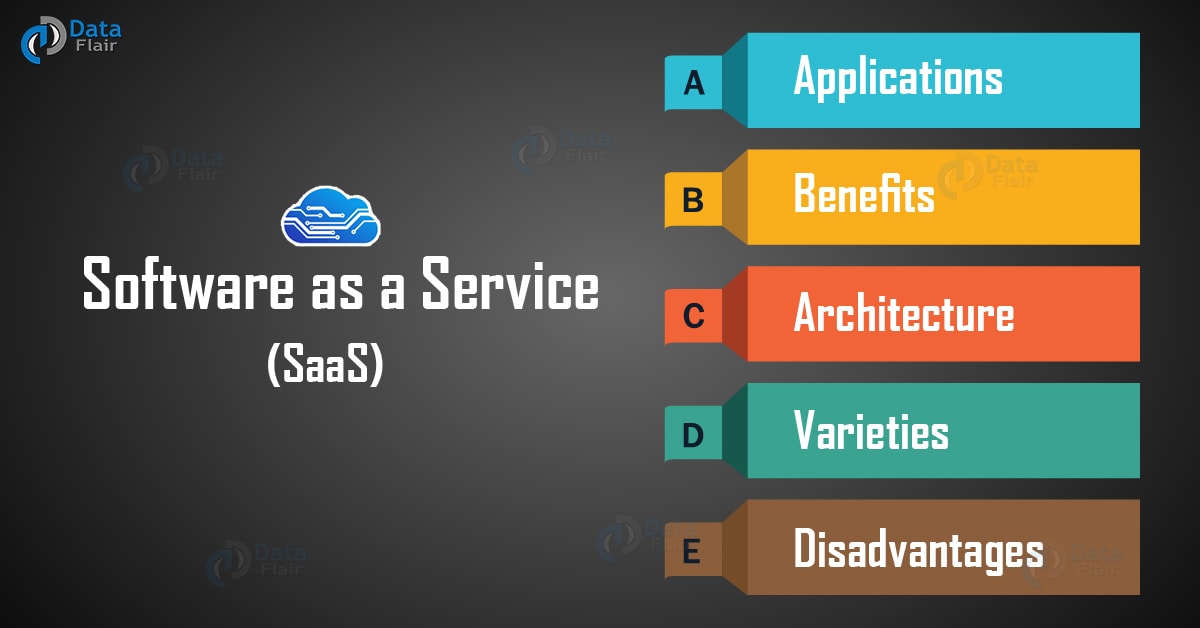

- E-commerce sites lose sales directly during outages.

- SaaS platforms risk violating SLAs (Service Level Agreements), leading to penalties.

- Search engines like Google factor site availability into ranking algorithms.

“Availability is not a feature. It’s a prerequisite.” — Ben Treynor, founder of Google’s Site Reliability Engineering (SRE) team.

Uptime vs. Downtime: The Hidden Costs

While Uptime measures availability, downtime reveals vulnerabilities. Understanding the relationship between the two helps organizations prioritize reliability.

Calculating the True Cost of Downtime

Downtime costs go beyond lost transactions. They include indirect losses such as brand damage, customer churn, and recovery efforts.

- Direct Costs: Lost sales, SLA penalties, and incident response labor.

- Indirect Costs: Damage to brand reputation, reduced customer trust, and SEO penalties.

- Opportunity Costs: Missed marketing campaigns or product launches due to system failure.
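These categories can be combined into a back-of-the-envelope estimate. The model below is a hypothetical sketch: the field names, the assumption that responders work exactly as long as the outage lasts, and the use of churned customers × lifetime value as a stand-in for brand damage are all illustrative choices, and the example figures are made up.

```python
from dataclasses import dataclass


@dataclass
class OutageCost:
    """Rough cost model for one outage. Every input is an estimate you supply."""
    minutes_down: float
    revenue_per_minute: float       # direct: sales that would otherwise have happened
    sla_credits: float              # direct: credits owed to customers
    responders: int                 # direct: people working the incident
    hourly_labor_rate: float
    churned_customers: int = 0      # indirect: customers lost because of the outage
    customer_lifetime_value: float = 0.0
    opportunity_cost: float = 0.0   # e.g. a delayed launch, estimated separately

    def direct(self) -> float:
        lost_sales = self.minutes_down * self.revenue_per_minute
        # Assumes responders work for exactly as long as the outage lasts.
        labor = self.responders * self.hourly_labor_rate * self.minutes_down / 60
        return lost_sales + self.sla_credits + labor

    def indirect(self) -> float:
        # Brand damage is hard to price; churn x lifetime value is one rough proxy.
        return self.churned_customers * self.customer_lifetime_value

    def total(self) -> float:
        return self.direct() + self.indirect() + self.opportunity_cost


# Hypothetical 30-minute outage.
outage = OutageCost(minutes_down=30, revenue_per_minute=1_000, sla_credits=5_000,
                    responders=4, hourly_labor_rate=100,
                    churned_customers=10, customer_lifetime_value=2_000)
print(f"direct ${outage.direct():,.0f}, indirect ${outage.indirect():,.0f}, "
      f"total ${outage.total():,.0f}")
# direct $35,200, indirect $20,000, total $55,200
```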

For instance, Amazon’s February 2017 S3 outage lasted roughly four hours but cost an estimated $150 million in lost business across dependent services.

Common Causes of Downtime

Understanding root causes is the first step in improving Uptime. The most frequent culprits include:

- Hardware Failures: Disk crashes, power supply issues, or network interface failures.

- Software Bugs: Unhandled exceptions, memory leaks, or race conditions in code.

- Human Error: Misconfigurations, accidental deletions, or deployment mistakes.

- Cyberattacks: DDoS attacks, ransomware, or credential breaches.

- Natural Disasters: Floods, fires, or earthquakes affecting data centers.

According to Cisco’s Annual Cybersecurity Report, human error accounts for over 20% of security incidents leading to downtime.

Industry Standards for Uptime: What 99.9% Really Means

Service providers often advertise Uptime guarantees using percentages like 99%, 99.9%, or even 99.999%. But what do these numbers actually mean in real-world terms?

Understanding the ‘Nines’ of Uptime

The ‘nines’ refer to the number of 9s in the Uptime percentage. Each additional nine represents a tenfold reduction in allowable downtime.

- 99% Uptime: ~3.65 days of downtime per year

- 99.9% Uptime: ~8.76 hours per year (common for mid-tier SaaS)

- 99.99% Uptime: ~52.6 minutes per year (enterprise-grade)

- 99.999% Uptime: ~5.26 minutes per year (ultra-reliable systems)
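Each budget above is just the period multiplied by (1 - availability). This sketch, assuming a 365-day year and a 30-day month, reproduces the yearly figures and adds monthly ones, which matter because many SLAs are measured per month. The helper names are hypothetical.

```python
import math

MINUTES_PER_YEAR = 365 * 24 * 60         # 525,600 (ignores leap years)
MINUTES_PER_30_DAY_MONTH = 30 * 24 * 60  # 43,200


def downtime_budget_minutes(availability_percent: float, period_minutes: float) -> float:
    """Minutes of downtime a given availability target allows over a period."""
    return period_minutes * (1 - availability_percent / 100)


def count_nines(availability_percent: float) -> float:
    """Number of nines, e.g. 99.9 -> 3.0. Each extra nine cuts the budget tenfold."""
    if not 0 < availability_percent < 100:
        raise ValueError("availability must be strictly between 0 and 100")
    # Rounded to hide float noise such as 1 - 0.999 != 0.001 exactly.
    return round(-math.log10(1 - availability_percent / 100), 2)


for target in (99.0, 99.9, 99.99, 99.999):
    per_year = downtime_budget_minutes(target, MINUTES_PER_YEAR)
    per_month = downtime_budget_minutes(target, MINUTES_PER_30_DAY_MONTH)
    print(f"{target}% ({count_nines(target)} nines): "
          f"{per_year:.2f} min/year, {per_month:.2f} min/month")
```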

These levels are often tied to Service Level Agreements (SLAs), which define compensation if Uptime falls below the promised threshold.

SLAs and Uptime Guarantees

An SLA is a contractual agreement between a service provider and a customer that specifies the expected level of service, including Uptime. For example:

- A cloud hosting provider might offer a 99.95% Uptime SLA.

- If the service drops below this, customers may receive service credits.

- SLAs often exclude scheduled maintenance or force majeure events.
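Exclusions can decide whether an SLA is breached at all. The sketch below uses a made-up credit schedule, not any real provider’s terms, and assumes that downtime during scheduled maintenance is removed from both the downtime and the measurement period; some SLAs handle exclusions differently.

```python
# Hypothetical credit schedule: (minimum monthly uptime %, credit as % of the bill).
# Real providers publish their own tiers; always check the actual SLA text.
CREDIT_TIERS = [(99.95, 0), (99.0, 10), (95.0, 25), (0.0, 100)]


def sla_uptime_percent(period_min: float, downtime_min: float,
                       maintenance_min: float = 0.0) -> float:
    """Monthly uptime with downtime during scheduled maintenance excluded.

    Assumes excluded minutes are removed from both the downtime and the
    measurement period; some SLAs only remove them from the downtime.
    """
    if not 0 <= maintenance_min <= downtime_min <= period_min:
        raise ValueError("need 0 <= maintenance_min <= downtime_min <= period_min")
    eligible = period_min - maintenance_min
    if eligible == 0:
        return 100.0
    counted_down = downtime_min - maintenance_min
    return (eligible - counted_down) / eligible * 100


def service_credit_percent(uptime: float) -> int:
    for minimum, credit in CREDIT_TIERS:  # tiers are sorted from best to worst
        if uptime >= minimum:
            return credit
    return 100


# 60 minutes down in a 30-day month, 40 of them during announced maintenance.
with_exclusion = sla_uptime_percent(43_200, 60, maintenance_min=40)
without_exclusion = sla_uptime_percent(43_200, 60)
print(f"{with_exclusion:.3f}% -> {service_credit_percent(with_exclusion)}% credit")
# 99.954% -> 0% credit
print(f"{without_exclusion:.3f}% -> {service_credit_percent(without_exclusion)}% credit")
# 99.861% -> 10% credit
```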

It’s crucial to read the fine print. Some providers define Uptime based on API response codes, while others use synthetic monitoring from specific regions.
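As an illustration of a response-code definition, the sketch below splits traffic into fixed windows and counts a window as down when too many requests fail with server errors. The 5-minute window and 5% threshold are hypothetical parameters, not any provider’s published values, and treating windows with no traffic as up is one convention among several.

```python
from collections import defaultdict
from typing import Iterable, Tuple


def interval_availability(requests: Iterable[Tuple[float, int]],
                          interval_s: int = 300,
                          max_error_rate: float = 0.05) -> float:
    """Percentage of fixed-length intervals that count as 'up'.

    requests: (unix_timestamp, http_status) pairs.
    An interval is 'down' when more than max_error_rate of its requests were
    5xx server errors; 4xx responses are client errors, not downtime.
    Intervals with no requests count as 'up' (a definitional choice).
    """
    totals = defaultdict(int)
    server_errors = defaultdict(int)
    for ts, status in requests:
        bucket = int(ts // interval_s)
        totals[bucket] += 1
        if 500 <= status <= 599:
            server_errors[bucket] += 1
    if not totals:
        return 100.0
    n_intervals = max(totals) - min(totals) + 1
    down = sum(1 for b, n in totals.items() if server_errors[b] / n > max_error_rate)
    return (n_intervals - down) / n_intervals * 100


# Hypothetical request log spanning three 5-minute intervals.
sample = [(0, 200), (10, 200), (300, 503), (310, 200), (600, 404)]
print(f"{interval_availability(sample):.2f}%")  # 66.67%
```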

How Top Companies Achieve Maximum Uptime

Leading tech companies like Google, AWS, and Netflix don’t achieve high Uptime by accident. They use a combination of engineering discipline, automation, and proactive monitoring.

Site Reliability Engineering (SRE) at Google

Google pioneered the concept of Site Reliability Engineering (SRE), a discipline that applies software engineering principles to operations. SRE teams are responsible for ensuring high Uptime while balancing feature development and system stability.

- SREs define error budgets: the amount of unreliability a service may accumulate over a period (1 minus its service level objective, or SLO) before action is required.

- If the error budget is exhausted, new feature deployments are paused to focus on reliability.

- This creates a feedback loop between development and operations.
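For a request-based objective, the error budget is simply (1 - SLO) × total requests. The sketch below assumes a success-ratio SLO and a simplified policy of freezing feature releases once the budget is fully spent; real error budget policies are usually more nuanced, and the function name is hypothetical.

```python
def error_budget_report(slo: float, total_requests: int, failed_requests: int) -> dict:
    """Request-based error budget for one SLO window.

    slo is a success-ratio target, e.g. 0.999 means 99.9% of requests should succeed.
    The freeze flag encodes a simplified policy: pause feature releases once the
    budget is fully spent.
    """
    if not 0 < slo < 1:
        raise ValueError("slo must be strictly between 0 and 1")
    # round() strips float noise such as 1 - 0.999 == 0.0010000000000000009
    allowed_failures = round((1 - slo) * total_requests, 9)
    if failed_requests == 0:
        consumed = 0.0
    elif allowed_failures == 0:
        consumed = float("inf")
    else:
        consumed = failed_requests / allowed_failures
    return {
        "allowed_failures": allowed_failures,
        "budget_consumed": consumed,  # 1.0 means the budget is exactly used up
        "freeze_feature_releases": consumed >= 1.0,
    }


report = error_budget_report(slo=0.999, total_requests=2_000_000, failed_requests=1_500)
print(report)
# {'allowed_failures': 2000.0, 'budget_consumed': 0.75, 'freeze_feature_releases': False}
```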

Learn more about Google’s SRE practices in their free book: Site Reliability Engineering.

Amazon Web Services (AWS) and Fault Tolerance

AWS designs its infrastructure for high Uptime using redundancy, auto-scaling, and isolated Availability Zones within Regions around the world. Key strategies include:

- Multi-AZ Deployments: Running applications across multiple Availability Zones to survive data center failures.

- Auto-Recovery Systems: Automatically replacing failed instances without human intervention.

- Global Edge Network: Using CloudFront to cache content closer to users, reducing dependency on origin servers.
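Why running in multiple zones helps can be shown with a little probability. Redundant copies combine in parallel (the service is up if any copy is up), while required dependencies combine in series (up only if all of them are up). The sketch assumes independent failures, which real zones only approximate, so the parallel result is best read as an upper bound; the availability figures are hypothetical.

```python
from math import prod


def in_series(*availabilities: float) -> float:
    """Every component is required, so all of them must be up at once."""
    return prod(availabilities)


def in_parallel(*availabilities: float) -> float:
    """Redundant replicas: the service is up if at least one replica is up.

    Assumes failures are independent. Real zones share some risks (software
    bugs, regional control planes), so treat the result as an upper bound.
    """
    return 1 - prod(1 - a for a in availabilities)


single_zone = 0.999                              # hypothetical per-zone availability
two_zones = in_parallel(single_zone, single_zone)
with_shared_db = in_series(two_zones, 0.9995)    # hypothetical single shared database
print(f"one zone:  {single_zone:.4%}")
print(f"two zones: {two_zones:.4%}")
print(f"two zones + one shared database: {with_shared_db:.4%}")
```

The last line shows why redundancy has to extend to every required component: a single non-redundant dependency caps the availability of the whole chain.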

AWS publishes uptime commitments for core services such as EC2 and S3 in per-service SLAs, and reports service disruptions on its Service Health Dashboard.

Monitoring and Measuring Uptime Effectively

You can’t improve what you don’t measure. Effective Uptime monitoring requires the right tools, metrics, and alerting strategies.

Essential Uptime Monitoring Tools

There are numerous tools available to track Uptime, each with different strengths:

- Pingdom: Offers real-user monitoring and transaction checks. Great for websites and APIs.

- Datadog: Provides full-stack observability, including logs, metrics, and traces.

- UptimeRobot: A cost-effective solution for small to medium businesses with basic monitoring needs.

- Nagios: Open-source tool ideal for on-premise infrastructure monitoring.

Choosing the right tool depends on your infrastructure complexity, budget, and required granularity.
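At their core, tools like these repeatedly probe an endpoint and track the results. The minimal sketch below, using only Python’s standard library, shows that idea: uptime is estimated from the share of successful checks, and an alert fires only after several consecutive failures to avoid paging on a single blip. The three-failure threshold and the class and function names are assumptions, and this is not how any of the products above are implemented.

```python
import http.client
import urllib.request
from typing import Callable


def http_probe(url: str, timeout: float = 5.0) -> bool:
    """One synthetic check: True if the URL answers with a 2xx or 3xx status in time."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return 200 <= resp.status < 400
    except (OSError, ValueError, http.client.HTTPException):
        # URLError, HTTPError (4xx/5xx) and timeouts are OSError subclasses;
        # ValueError covers malformed URLs, HTTPException malformed responses.
        return False


class UptimeMonitor:
    """Tracks check results and alerts after several consecutive failures."""

    def __init__(self, probe: Callable[[], bool], alert_after: int = 3,
                 on_alert: Callable[[str], None] = print):
        self.probe = probe
        self.alert_after = alert_after
        self.on_alert = on_alert
        self.checks = 0
        self.failures = 0
        self.consecutive_failures = 0

    def check(self) -> bool:
        ok = self.probe()
        self.checks += 1
        if ok:
            self.consecutive_failures = 0
        else:
            self.failures += 1
            self.consecutive_failures += 1
            # Fire once per outage, when the streak first reaches the threshold.
            if self.consecutive_failures == self.alert_after:
                self.on_alert(f"DOWN: {self.consecutive_failures} consecutive failed checks")
        return ok

    def uptime_percent(self) -> float:
        """Share of checks that succeeded; 100.0 before any check has run."""
        if self.checks == 0:
            return 100.0
        return (self.checks - self.failures) / self.checks * 100
```

To watch a real endpoint, pass something like `lambda: http_probe("https://example.com/health")` (a hypothetical health URL) as the probe and call `check()` on a fixed schedule, such as once a minute. The check interval also sets the resolution of the uptime estimate: an outage shorter than the interval can go unnoticed.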

Key Metrics Beyond Uptime

While Uptime is critical, it should be part of a broader set of reliability metrics:

- Mean Time Between Failures (MTBF): Average time between system breakdowns.

- Mean Time to Repair (MTTR): Average time to restore service after a failure.

- Latency: Response time of your system, which affects perceived performance.

- Error Rates: Percentage of failed requests vs. total requests.
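These metrics can be computed from an outage log and request samples. In the sketch below, MTBF is taken as the mean operating time per failure (some texts call this MTTF), which makes the classic formula availability = MTBF / (MTBF + MTTR) match the uptime percentage exactly. The function names and the example outages are hypothetical.

```python
from statistics import quantiles
from typing import List, Sequence, Tuple


def reliability_metrics(outages: List[Tuple[float, float]], period_hours: float) -> dict:
    """outages: non-overlapping (start_hour, end_hour) pairs inside the period."""
    downtime = sum(end - start for start, end in outages)
    operating = period_hours - downtime
    n = len(outages)
    if n == 0:
        return {"mtbf_h": float("inf"), "mttr_h": 0.0, "availability": 1.0}
    mtbf = operating / n   # mean operating time per failure
    mttr = downtime / n    # mean time to restore service
    return {"mtbf_h": mtbf, "mttr_h": mttr, "availability": mtbf / (mtbf + mttr)}


def error_rate(statuses: Sequence[int]) -> float:
    """Share of responses that were 5xx server errors."""
    return sum(500 <= s <= 599 for s in statuses) / len(statuses) if statuses else 0.0


def p95(latencies_ms: Sequence[float]) -> float:
    """95th-percentile latency; needs at least two samples."""
    return quantiles(latencies_ms, n=20)[-1]


# Hypothetical 30-day month (720 h) with two outages: 1 h and 30 min.
m = reliability_metrics([(100.0, 101.0), (400.0, 400.5)], period_hours=720)
print(f"MTBF {m['mtbf_h']:.2f} h, MTTR {m['mttr_h']:.2f} h, "
      f"availability {m['availability']:.3%}")
# MTBF 359.25 h, MTTR 0.75 h, availability 99.792%
```

A service can score well on `reliability_metrics` and still disappoint users, which is why `error_rate` and `p95` latency are tracked alongside it.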

For example, a system can have 99.9% Uptime but still suffer from high latency, leading to poor user experience.

Strategies to Improve Your System’s Uptime

Improving Uptime isn’t about one magic fix—it’s about implementing layered strategies that reduce risk and increase resilience.

Implement Redundancy and Failover Systems

Redundancy ensures that if one component fails, another can take over seamlessly. This applies at every level:

- Server Redundancy: Use load balancers to distribute traffic across multiple servers.

- Database Replication: Maintain primary and secondary databases in different locations.

- Network Redundancy: Multiple internet providers or backup connections.

Failover mechanisms should be automated to minimize human intervention during outages.
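The core of automated failover is skipping unhealthy targets without waiting for a human. The sketch below shows that logic as a round-robin balancer; in practice load balancers run health checks continuously in the background rather than inline on each request, and the class and backend names here are hypothetical.

```python
import itertools
from typing import Callable, Sequence


class FailoverBalancer:
    """Round-robin over backends, skipping any whose health check fails."""

    def __init__(self, backends: Sequence[str], is_healthy: Callable[[str], bool]):
        if not backends:
            raise ValueError("need at least one backend")
        self.backends = list(backends)
        self.is_healthy = is_healthy
        self._rotation = itertools.cycle(range(len(self.backends)))

    def pick(self) -> str:
        # Try each backend at most once per call, continuing the rotation.
        for _ in range(len(self.backends)):
            backend = self.backends[next(self._rotation)]
            if self.is_healthy(backend):
                return backend
        raise RuntimeError("no healthy backends: every replica failed its health check")


health = {"app-1": True, "app-2": True, "app-3": False}  # hypothetical backends; app-3 is down
lb = FailoverBalancer(list(health), is_healthy=lambda b: health[b])
print([lb.pick() for _ in range(4)])  # ['app-1', 'app-2', 'app-1', 'app-2']
```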

Automate Deployments and Rollbacks

Manual deployments are a major source of human error. Automation reduces risk and increases consistency.

- Use CI/CD pipelines (e.g., Jenkins, GitHub Actions, GitLab CI) to automate testing and deployment.

- Implement blue-green deployments to switch traffic between two identical environments, or canary releases to test changes on a small subset of users first.

- Enable automatic rollbacks if health checks fail post-deployment.

Netflix’s Spinnaker platform is a great example of automated, reliable deployment at scale.
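A canary release with automatic rollback boils down to comparing the new version’s health against the current one and acting on the result. Platforms like Spinnaker do this with far richer statistics; the sketch below is a deliberately simple stand-in that compares error rates, and its thresholds (minimum traffic, allowed ratio, absolute floor) are hypothetical defaults.

```python
from dataclasses import dataclass


@dataclass
class Stats:
    requests: int
    errors: int

    @property
    def error_rate(self) -> float:
        return self.errors / self.requests if self.requests else 0.0


def canary_decision(baseline: Stats, canary: Stats, max_ratio: float = 2.0,
                    min_requests: int = 500, floor: float = 0.001) -> str:
    """Return 'wait', 'rollback', or 'promote' (hypothetical thresholds)."""
    if canary.requests < min_requests:
        return "wait"  # too little traffic on the canary to judge yet
    # The absolute floor stops a near-zero baseline from making any single error fatal.
    allowed = max(baseline.error_rate * max_ratio, floor)
    return "rollback" if canary.error_rate > allowed else "promote"


baseline = Stats(requests=20_000, errors=20)  # current version: 0.1% errors
canary = Stats(requests=1_000, errors=6)      # new version on a small slice: 0.6% errors
print(canary_decision(baseline, canary))      # rollback
```

In a pipeline, a `rollback` result would trigger redeploying the previous version, while `promote` would shift more traffic to the new one.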

Conduct Regular Disaster Recovery Drills

Having a disaster recovery plan isn’t enough—you must test it regularly.

- Simulate server crashes, network outages, or data corruption.

- Measure how quickly your team can restore service.

- Document lessons learned and update procedures accordingly.

Regular drills build muscle memory and expose hidden weaknesses in your infrastructure.
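Drills can start small, even in code. The sketch below injects failures into a dependency at a chosen rate and tallies how many calls the fallback path absorbed, a lightweight version of the fault injection that chaos engineering tools perform on real infrastructure. The function names, failure rates, and seed are illustrative assumptions.

```python
import random
from typing import Callable, Dict


def inject_faults(func: Callable[[], str], failure_rate: float,
                  rng: random.Random) -> Callable[[], str]:
    """Wrap func so each call fails with probability failure_rate (simulated outage)."""
    def wrapper() -> str:
        if rng.random() < failure_rate:
            raise ConnectionError("injected failure")
        return func()
    return wrapper


def run_drill(primary: Callable[[], str], fallback: Callable[[], str],
              calls: int = 1_000) -> Dict[str, int]:
    """Send calls through primary, falling back on failure, and tally outcomes."""
    tally = {"primary": 0, "fallback": 0, "failed": 0}
    for _ in range(calls):
        try:
            primary()
            tally["primary"] += 1
        except ConnectionError:
            try:
                fallback()
                tally["fallback"] += 1
            except ConnectionError:
                tally["failed"] += 1
    return tally


rng = random.Random(42)  # fixed seed so the drill is repeatable
primary = inject_faults(lambda: "primary ok", failure_rate=0.3, rng=rng)
replica = inject_faults(lambda: "replica ok", failure_rate=0.05, rng=rng)
print(run_drill(primary, replica))
```

A non-zero `failed` count is exactly the kind of hidden weakness a drill is meant to expose before a real outage does.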

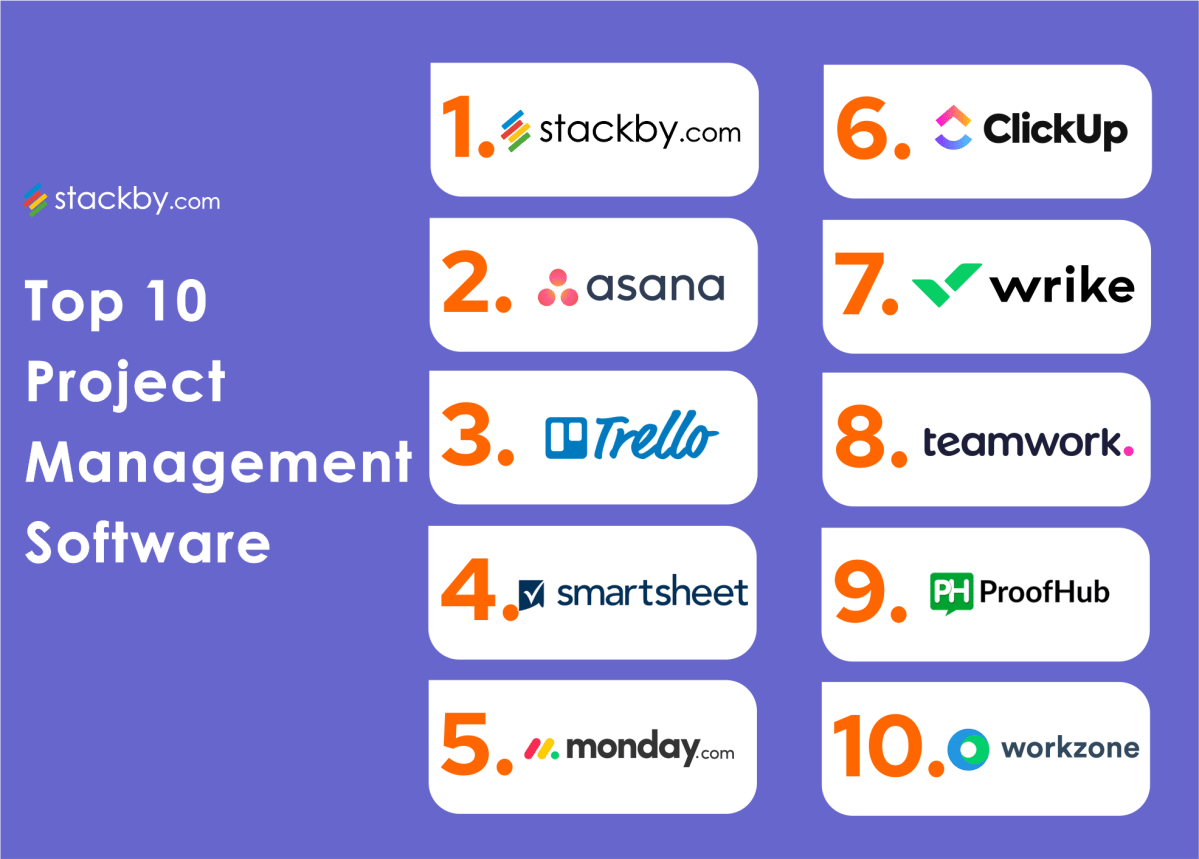

The Role of Cloud Providers in Ensuring Uptime

Cloud computing has revolutionized how organizations manage Uptime. Instead of relying on in-house data centers, businesses can leverage the scale and expertise of cloud providers.

Benefits of Cloud-Based Uptime Management

Cloud platforms offer several advantages over traditional on-premise setups:

- Global Infrastructure: Data centers in multiple regions reduce latency and increase fault tolerance.

- Automatic Scaling: Handle traffic spikes without manual intervention.

- Built-in Redundancy: Replication across zones and regions is often enabled by default.

- Expert Maintenance: Cloud providers handle hardware upgrades, security patches, and network optimization.

For example, Microsoft Azure offers a 99.95% Uptime SLA for its virtual machines when deployed in availability sets.

Choosing the Right Cloud Provider for Uptime

Not all cloud providers are equal when it comes to reliability. Consider the following when selecting a provider:

- SLA Terms: What Uptime percentage is guaranteed? What compensation is offered?

- Geographic Coverage: Does the provider have data centers near your users?

- Service History: Check public outage reports and status pages.

- Support Options: 24/7 support can be crucial during critical incidents.

Providers like AWS, Google Cloud, and Azure all offer robust Uptime, but your specific workload and compliance needs may influence your choice.

Future Trends in Uptime and System Reliability

As technology evolves, so do the methods for ensuring high Uptime. Emerging trends are shaping the future of system reliability.

AI-Powered Predictive Maintenance

Artificial Intelligence is being used to predict failures before they happen. By analyzing logs, metrics, and user behavior, AI models can detect anomalies and trigger preventive actions.

- Google uses machine learning to predict disk failures in its data centers.

- Tools like Splunk and Dynatrace offer AI-driven root cause analysis.

- Predictive alerts can reduce MTTR by pointing teams toward the likely source of an issue.

This shift from reactive to proactive maintenance is a game-changer for Uptime optimization.
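Production AIOps tools use more sophisticated models, but the underlying idea of flagging behavior that departs from a learned baseline can be shown with a rolling z-score. This is a simple statistical technique, not machine learning, and the window size, three-sigma threshold, and sample latencies are hypothetical.

```python
from collections import deque
from statistics import mean, stdev


class ZScoreDetector:
    """Flags samples far from the mean of a sliding window of recent values."""

    def __init__(self, window: int = 60, threshold: float = 3.0, min_history: int = 10):
        self.samples = deque(maxlen=window)
        self.threshold = threshold      # how many standard deviations count as anomalous
        self.min_history = min_history  # samples needed before judging anything

    def observe(self, value: float) -> bool:
        anomalous = False
        if len(self.samples) >= self.min_history:
            mu = mean(self.samples)
            sigma = stdev(self.samples)
            if sigma > 0 and abs(value - mu) / sigma > self.threshold:
                anomalous = True
        # Every sample joins the window, so a lasting level shift becomes the new normal.
        self.samples.append(value)
        return anomalous


detector = ZScoreDetector()
latencies_ms = [100, 102] * 10 + [150]  # hypothetical latency samples; the last is a spike
flags = [detector.observe(v) for v in latencies_ms]
print(flags.index(True))  # 20: only the spike is flagged
```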

Edge Computing and Uptime

Edge computing brings processing closer to the user, reducing latency and dependency on central servers. This can improve perceived Uptime, even if the core system experiences issues.

- Content Delivery Networks (CDNs) like Cloudflare and Akamai cache static assets at the edge.

- Edge functions (e.g., Cloudflare Workers) allow logic to run closer to users.

- If the origin server goes down, edge caches can serve stale content temporarily.

This resilience makes edge computing a powerful tool for maintaining availability during backend outages.
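Serving stale content on origin failure is the idea behind the HTTP `Cache-Control: stale-if-error` extension defined in RFC 5861. The in-process sketch below mimics that behavior; it is not how Cloudflare, Akamai, or any other CDN implements caching, and the TTL, stale window, and fake origin are assumptions for illustration.

```python
import time
from typing import Callable, Dict, Tuple


class StaleIfErrorCache:
    """Serve cached content while fresh; on origin failure, serve it stale for a while."""

    def __init__(self, fetch_origin: Callable[[str], str], ttl_s: float = 60.0,
                 stale_if_error_s: float = 3600.0,
                 clock: Callable[[], float] = time.monotonic):
        self.fetch_origin = fetch_origin
        self.ttl_s = ttl_s                        # how long a copy counts as fresh
        self.stale_if_error_s = stale_if_error_s  # extra time a stale copy may be used on errors
        self.clock = clock
        self._store: Dict[str, Tuple[str, float]] = {}  # key -> (body, fetched_at)

    def get(self, key: str) -> str:
        now = self.clock()
        cached = self._store.get(key)
        if cached is not None and now - cached[1] < self.ttl_s:
            return cached[0]  # fresh hit: origin not contacted
        try:
            body = self.fetch_origin(key)
        except Exception:
            # Origin failed: fall back to the stale copy if it is still within the window.
            if cached is not None and now - cached[1] < self.ttl_s + self.stale_if_error_s:
                return cached[0]
            raise
        self._store[key] = (body, now)
        return body


clock = [0.0]
origin_up = [True]


def fake_origin(path: str) -> str:  # hypothetical origin server
    if not origin_up[0]:
        raise ConnectionError("origin unreachable")
    return f"<html>{path}</html>"


cache = StaleIfErrorCache(fake_origin, ttl_s=60, stale_if_error_s=3600, clock=lambda: clock[0])
cache.get("/pricing")              # fetched from origin and cached
origin_up[0], clock[0] = False, 600.0
print(cache.get("/pricing"))       # origin down, stale copy served: <html>/pricing</html>
```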

Zero Trust Architecture and Security-Driven Uptime

Security breaches are a major cause of downtime. Zero Trust Architecture (ZTA) assumes no user or device is trusted by default, reducing the risk of lateral movement during attacks.

- Micro-segmentation limits the blast radius of a breach.

- Continuous authentication ensures only legitimate users access systems.

- Secure access service edge (SASE) combines ZTA with cloud networking for resilient access.

By preventing breaches, Zero Trust indirectly improves Uptime by reducing the risk from one of the most disruptive causes of downtime.

What is Uptime and why is it important?

Uptime measures how long a system remains operational and accessible. It’s crucial because downtime leads to lost revenue, damaged reputation, and poor user experience. High Uptime ensures reliability, supports SEO, and fulfills SLA commitments.

How is Uptime calculated?

Uptime is calculated as: (Total Time – Downtime) / Total Time × 100%. For example, 99.9% Uptime means the system is down for no more than 8.76 hours per year.

What is considered good Uptime?

For most businesses, 99.9% Uptime is considered good. Enterprise systems often aim for 99.99% or higher. The ideal level depends on your industry, SLAs, and customer expectations.

How can I monitor my website’s Uptime?

You can use tools like Pingdom, UptimeRobot, or Datadog to monitor your website’s availability. These services check your site at regular intervals (for example, with HTTP requests or pings) and alert you if it becomes unreachable.

Can Uptime be 100%?

In theory, 100% Uptime is possible, but in practice it is unattainable because of unforeseen events like natural disasters, hardware failures, or software bugs. ‘Five nines’ (99.999%) is often cited as the gold standard, though it is usually reserved for the most critical systems.

Uptime is more than a number—it’s a reflection of your organization’s reliability, technical maturity, and commitment to users. From understanding the cost of downtime to leveraging cloud infrastructure and AI-driven monitoring, achieving high Uptime requires a strategic, multi-layered approach. By adopting best practices from industry leaders, investing in the right tools, and continuously testing your systems, you can build a resilient digital presence that users can count on—every second of every day.

Further Reading: